
From Strategy to Reality: Building AI-Native Products

by Seven Peaks on Oct. 24, 2025

MIT’s recent State of AI in Business 2025 report found that 95% of AI initiatives fail. Most of them fail not because of the technology, but because teams struggle to bring them from prototype to production. The challenge isn't building AI; it's scaling it safely and sustainably within real business systems.

In my experience, most failures happen not because teams lack ambition, but because they underestimate the discipline required to move from experimentation to production.

Success requires a structured approach that balances rapid experimentation with operational maturity.

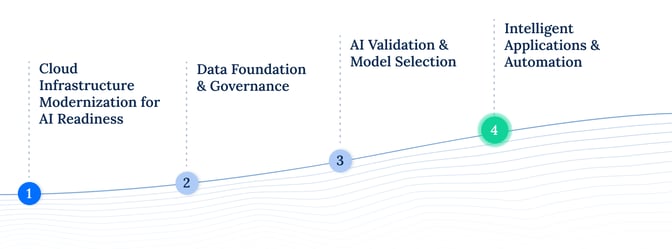

From an AI idea to a scalable product in four stages

Building an AI-native product follows a clear path from initial concept to scalable solution. This can be broken down into four distinct stages.

1. Define the Problem

The best AI initiatives start with clear pain points, not technology trends. The first step is to talk to users, review statistics, and study workflows to find where AI can create real impact.

Strong candidates share common traits like high friction, high volume, and measurable value if solved. You might look for repetitive tasks like reporting or ticket triage, information scattered across multiple sources, decision bottlenecks where teams wait for analysis, or user experience gaps in customer support and personalization.
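One lightweight way to compare candidates is to score each one on the three traits above. The sketch below is our own illustration, not a standard framework: the 1 to 5 scales, the multiplicative score, and the example use cases are all assumptions you would tune with your own data.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    friction: int       # 1-5: how painful the current workflow is (assumed scale)
    volume: int         # 1-5: how often the task occurs
    measurability: int  # 1-5: how clearly success can be measured

def score(c: Candidate) -> int:
    # Multiplying (rather than adding) means one weak trait drags the total down,
    # so a high-volume task with no measurable value still ranks low.
    return c.friction * c.volume * c.measurability

def rank(candidates: list[Candidate]) -> list[Candidate]:
    return sorted(candidates, key=score, reverse=True)

# Hypothetical candidates for illustration only.
candidates = [
    Candidate("ticket triage", friction=4, volume=5, measurability=4),
    Candidate("weekly reporting", friction=3, volume=2, measurability=4),
    Candidate("brand tagline ideas", friction=2, volume=1, measurability=1),
]
for c in rank(candidates):
    print(c.name, score(c))
```

A spreadsheet works just as well; the point is to make the "high friction, high volume, measurable value" test explicit before anyone picks a model.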

2. Run a Proof of Concept

With a clear problem to solve, it's important to experiment quickly to validate technical feasibility before significant investment. Modern platforms make this accessible. For example, Azure AI Foundry offers multi-model playgrounds with built-in evaluation, Google AI Studio enables fast Gemini experiments that export to Vertex AI, Anthropic Console provides workbenches for refining Claude prompts with strong reasoning capabilities, and Amazon Bedrock provides multi-model testing backed by AWS enterprise trust.
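Whichever platform you pick, a proof of concept is only convincing if you measure it against a fixed set of test cases. Here's a minimal, platform-agnostic sketch of such a harness. The `fake_triage_model` function is a hypothetical stand-in for a real model call, and "output contains the expected label" is just one simple pass criterion among many.

```python
from typing import Callable

# Each case pairs an input with the label a correct answer must contain.
Case = tuple[str, str]

def evaluate(model: Callable[[str], str], cases: list[Case]) -> float:
    """Return the fraction of cases whose output contains the expected label."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = sum(1 for prompt, expected in cases
                 if expected.lower() in model(prompt).lower())
    return passed / len(cases)

# Hypothetical stand-in; replace with a call to your chosen platform's SDK.
def fake_triage_model(ticket: str) -> str:
    return "billing" if "invoice" in ticket.lower() else "technical"

cases = [
    ("My invoice is wrong", "billing"),
    ("App crashes on login", "technical"),
    ("Refund for last invoice?", "billing"),
    ("Charged twice this month", "billing"),
]
print(evaluate(fake_triage_model, cases))  # 0.75: the keyword rule misses the last case
```

Keeping the same test cases as you swap prompts or models turns "it seems to work" into a number you can compare.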

"The goal here is to prove value fast while learning what works and what doesn't."


Leif Mork

VP of Digital Products at Seven Peaks

3. Build a Minimum Viable Product (MVP)

From there, you can connect the AI into real systems and give early users access through apps, bots, or integrations. You can do this through Azure AI Foundry endpoints, Google Vertex AI's Agent Builder, Anthropic's API, OpenAI's Assistants, or Amazon Bedrock Agents. Each offers pathways to integrate with Slack, Teams, web, and mobile platforms.

Your MVP should be functional enough to gather real feedback but focused enough to build quickly.

4. Scale Safely

Finally, before you make a full production rollout, you have to add governance, monitoring, and enterprise controls. Tools like Azure Application Insights for performance monitoring, Google Vertex AI for drift detection, or Amazon Bedrock for governance help manage access and alerts. These aren't optional. They're what separates demos from enterprise systems that stakeholders trust.
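To make "drift detection" concrete, here's a sketch of one common, vendor-neutral technique: the Population Stability Index (PSI), which compares how inputs or outputs are distributed today against a baseline. This is not a description of how any of the managed tools above work internally, and the 0.2 threshold is a widely used rule of thumb rather than a standard.

```python
import math

def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both lists hold the share of observations per bin (each sums to ~1).
    """
    if len(expected) != len(actual):
        raise ValueError("bin counts must match")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

def check_drift(expected: list[float], actual: list[float], threshold: float = 0.2) -> bool:
    # 0.2 is a common rule of thumb for "significant" drift; tune it per metric.
    return psi(expected, actual) > threshold

# Hypothetical example: share of support tickets per category at launch vs. this week.
baseline = [0.25, 0.25, 0.25, 0.25]
this_week = [0.10, 0.20, 0.30, 0.40]
print(round(psi(baseline, this_week), 3), check_drift(baseline, this_week))
```

In production you would run a check like this on a schedule and route the result into whatever alerting your monitoring stack already provides.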

For teams just starting, focus on one narrow use case where data is clean, measurable, and repeatable. Early success with one AI workflow often unlocks organization-wide momentum.

The Shift from Suggestions to Action

Model Context Protocol: A new standard

Imagine asking an AI: "Book Mission Impossible at the nearest cinema to me between 6–9 PM." Last year, AI would have responded with links to the theater website and suggestions for showtimes. Today, with Model Context Protocol (MCP), AI can complete the booking.

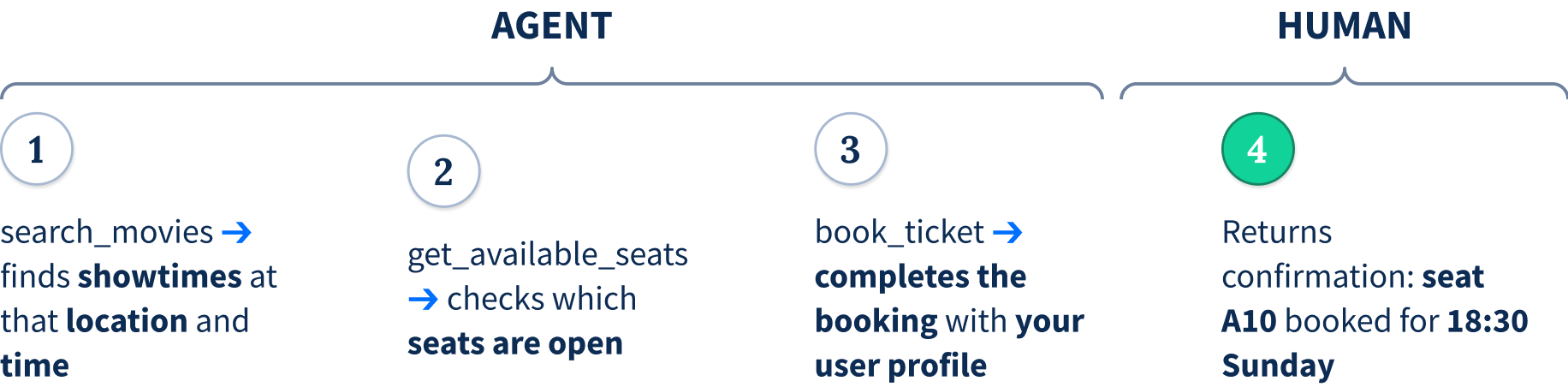

MCP represents a major shift in how AI interacts with systems. Rather than AI that just suggests actions, MCP lets AI execute them directly through structured, discoverable, and safe interfaces. Here's how it works with our movie booking example.
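Under the hood, MCP messages are JSON-RPC 2.0: a client asks a server what tools it offers (`tools/list`) and then invokes one (`tools/call`). The sketch below builds those messages as plain Python dicts so the shapes are visible; it is a toy, not the official MCP SDK. Field names follow the MCP specification as we understand it, so check the current spec revision before relying on them. The `search_movies` arguments and the canned showtimes are hypothetical.

```python
import json

# Shape of one tool descriptor as returned by an MCP "tools/list" call.
# The tool name comes from the example; its arguments are hypothetical.
SEARCH_MOVIES = {
    "name": "search_movies",
    "description": "Find showtimes for a movie near a location.",
    "inputSchema": {
        "type": "object",
        "properties": {"title": {"type": "string"}, "near": {"type": "string"}},
        "required": ["title", "near"],
    },
}
TOOLS = {SEARCH_MOVIES["name"]: SEARCH_MOVIES}

def tools_list_response(request_id: int) -> dict:
    """Reply to "tools/list": this is how the agent discovers capabilities."""
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"tools": list(TOOLS.values())}}

def tools_call_request(request_id: int, name: str, arguments: dict) -> dict:
    """What the client sends when the model decides to invoke a tool."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}

def handle_call(request: dict) -> dict:
    """Toy server handler that returns canned showtimes."""
    params = request["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        # Unknown tools are a protocol-level error (JSON-RPC "invalid params").
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    missing = [k for k in tool["inputSchema"]["required"] if k not in params["arguments"]]
    # Tool-level failures are reported inside the result with isError set.
    text = f"Missing arguments: {missing}" if missing else "18:30, 20:15"
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}],
                       "isError": bool(missing)}}

req = tools_call_request(2, "search_movies",
                         {"title": "Mission Impossible", "near": "current location"})
print(json.dumps(handle_call(req), indent=2))
```

The `inputSchema` is what makes the interface "structured": the model sees exactly which arguments a tool expects before it calls it.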

How the Agent Thinks and Acts

When you make that booking request, the MCP-powered agent first discovers what actions are available. It understands it can search_theaters, search_movies, get_available_seats, and book_tickets. This self-discovering capability means the AI doesn't need hardcoded instructions for every possible task.
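The "self-discovering" idea can be illustrated without any protocol at all: tools register themselves, and the agent reads their names, descriptions, and parameters at runtime instead of relying on hardcoded instructions. This in-process registry is a hypothetical simplification of what an MCP server exposes; the stub tools return canned data.

```python
import inspect
from typing import Any, Callable

REGISTRY: dict[str, Callable[..., Any]] = {}

def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so an agent can discover it at runtime."""
    REGISTRY[fn.__name__] = fn
    return fn

# Stub tools named after the example; bodies return canned data.
@tool
def search_theaters(near: str) -> list[str]:
    """Find theaters near a location."""
    return ["Downtown Cinema"]

@tool
def search_movies(title: str, theater: str) -> list[str]:
    """List showtimes for a movie at a theater."""
    return ["18:30", "20:15"]

@tool
def get_available_seats(showtime: str) -> list[str]:
    """Return the free seats for a showing."""
    return ["A10", "A11", "A12"]

@tool
def book_tickets(showtime: str, seats: list[str]) -> str:
    """Book seats and return a confirmation reference."""
    return f"CONF-{showtime}-{'-'.join(seats)}"

def discover() -> list[dict]:
    """What the agent sees: names, descriptions, and parameter names."""
    return [{"name": name,
             "description": inspect.getdoc(fn),
             "params": list(inspect.signature(fn).parameters)}
            for name, fn in REGISTRY.items()]

def call(name: str, **kwargs: Any) -> Any:
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    return REGISTRY[name](**kwargs)

for t in discover():
    print(t["name"], t["params"])
```

Adding a fifth tool is just another decorated function; nothing in the agent's planning code has to change.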


The agent begins its planning. It needs to find the right movie at the right location, during the specified time window. It executes search_movies, which returns Mission Impossible showtimes at the nearest theater to you. There's a 6:30 PM and an 8:15 PM showing, and both are within your window.
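The time-window step is ordinary filtering once the tool has returned structured data. A minimal sketch, assuming `search_movies` returns showtimes as `datetime.time` values (the extra 2:00 PM and 10:00 PM showings are made up to show what gets filtered out):

```python
from datetime import time

def within_window(showtimes: list[time], start: time, end: time) -> list[time]:
    """Keep showtimes that start inside [start, end], earliest first."""
    return sorted(t for t in showtimes if start <= t <= end)

# Hypothetical search_movies result for the nearest theater.
showtimes = [time(14, 0), time(18, 30), time(20, 15), time(22, 0)]
print(within_window(showtimes, time(18, 0), time(21, 0)))
```

With a 6 to 9 PM window, only the 6:30 PM and 8:15 PM showings survive, matching the example.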

Through its execution layer, the agent calls get_available_seats for the 6:30 PM showing. The theater's system returns a seat map. The agent's memory recalls your past preferences: you typically choose aisle seats in the middle section.
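Applying remembered preferences can be as simple as ranking the free seats. In this sketch the agent's "memory" is a plain dict, the "middle section" is assumed to be the middle third of rows, and the scoring weights are arbitrary; all three are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Seat:
    label: str
    row: int        # 0 = front row
    aisle: bool
    available: bool

def pick_seats(seats: list[Seat], n: int, total_rows: int, prefs: dict) -> list[str]:
    """Rank available seats by remembered preferences and return the best n labels."""
    lo, hi = total_rows // 3, 2 * total_rows // 3  # assumed "middle section"

    def score(s: Seat) -> int:
        points = 0
        if prefs.get("aisle") and s.aisle:
            points += 2
        if prefs.get("middle") and lo <= s.row < hi:
            points += 1
        return points

    free = [s for s in seats if s.available]
    # sorted() is stable even with reverse=True, so ties keep seat-map order.
    return [s.label for s in sorted(free, key=score, reverse=True)[:n]]

# Hypothetical seat map and preferences.
seats = [
    Seat("A1", 0, True, True),
    Seat("E1", 4, True, False),
    Seat("E2", 4, False, True),
    Seat("F10", 5, True, True),
    Seat("D10", 3, True, True),
]
print(pick_seats(seats, 2, total_rows=9, prefs={"aisle": True, "middle": True}))
```

The result is a proposal, not a booking; the next step is to show it to the user.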

Before finalizing, the agent employs human-in-the-loop refinement: "I found two showtimes. The 6:30 PM showing has better seat availability in your preferred section. Should I book seats A10 and A12?" You confirm. The agent executes book_tickets with your profile, and the system returns confirmation.

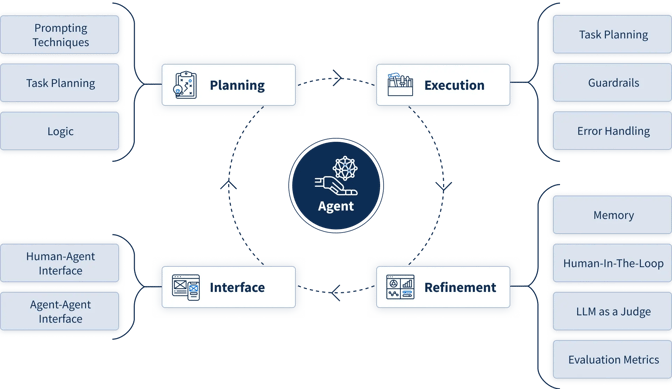

Agentic Workflow Structure

Throughout this workflow, guardrails provide safety. The agent can't book without confirmation on purchases above a threshold. Error handling catches issues like sold-out shows. Rate limits prevent accidental multiple bookings. These structured components (planning logic, execution through tools, refinement mechanisms, and protective guardrails) form the architecture of reliable agentic systems.
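Those three guardrails can live in a thin wrapper around the booking tool rather than inside the model's prompt. Below is a minimal sketch, assuming a hypothetical `book` callable that raises `SoldOut` when a showing is full; the price threshold and 60-second cooldown are arbitrary example values, and the clock is injectable so the behavior can be tested.

```python
import time
from typing import Callable

class SoldOut(Exception):
    """Raised by the (hypothetical) booking backend when seats are gone."""

class GuardedBooker:
    """Wraps a booking call with confirmation, duplicate, and error guardrails."""

    def __init__(self, book: Callable[[str, list[str]], str], confirm_above: float,
                 cooldown_s: float = 60.0, clock: Callable[[], float] = time.monotonic):
        self.book = book
        self.confirm_above = confirm_above
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.recent: dict[tuple, float] = {}

    def run(self, showtime: str, seats: list[str], price: float,
            confirmed: bool = False) -> str:
        # 1. Purchases above the threshold need explicit user confirmation.
        if price > self.confirm_above and not confirmed:
            return "NEEDS_CONFIRMATION"
        # 2. Block an identical booking repeated within the cooldown window.
        key = (showtime, tuple(sorted(seats)))
        now = self.clock()
        last = self.recent.get(key)
        if last is not None and now - last < self.cooldown_s:
            return "DUPLICATE_BLOCKED"
        # 3. Turn a sold-out failure into a result the agent can reason about.
        try:
            ref = self.book(showtime, seats)
        except SoldOut:
            return "SOLD_OUT"
        self.recent[key] = now
        return ref
```

Because the wrapper returns explicit status values, the agent can react sensibly: ask the user to confirm, offer the other showtime, or stop.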

Autonomy vs. Assistance

This new capability raises an important question: when should AI act autonomously versus assist? Our movie booking example works because it's structured and repeatable, the outcome can be validated automatically, and users want to save time by offloading the entire task.

Not every scenario suits autonomy. If you were booking a corporate event with budget implications, planning a medical procedure, or negotiating a contract, you'd want assistive AI, augmenting your decision-making rather than executing on your behalf. Those situations involve legal, financial, or human impact requiring judgment and approval.

Now that AI can take action through MCP, should it assist the user or act on their behalf?

| Autonomous AI | Assistive AI |
|---|---|
| Executes on your behalf: the AI decides and acts, within guardrails | Augments human decision-making: the AI suggests, you decide |
| The task is structured, rule-based, or repeatable, and the outcome can be validated automatically | The outcome has legal, financial, or human impact, or the task needs human judgment or review |
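The criteria in the table can be encoded as an explicit policy, so the choice between acting and assisting is reviewable rather than implicit. This is a hypothetical sketch; the rule that any high-stakes signal forces assistive mode is our assumption about a sensible default.

```python
from dataclasses import dataclass

@dataclass
class Task:
    structured: bool       # structured, rule-based, or repeatable
    auto_verifiable: bool  # outcome can be validated automatically
    high_impact: bool      # legal, financial, or human impact
    needs_judgment: bool   # needs human judgment or review

def mode(task: Task) -> str:
    # Any high-stakes signal wins: assist rather than act.
    if task.high_impact or task.needs_judgment:
        return "assistive"
    if task.structured and task.auto_verifiable:
        return "autonomous"
    return "assistive"  # unclear cases fall back to the safer mode

movie_booking = Task(structured=True, auto_verifiable=True, high_impact=False, needs_judgment=False)
corporate_event = Task(structured=True, auto_verifiable=True, high_impact=True, needs_judgment=True)
print(mode(movie_booking), mode(corporate_event))
```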

The smartest organizations blend both approaches strategically, letting AI execute where appropriate while keeping humans engaged where it matters most.

The Future is Headless with a Structured Foundation

To support agentic workflows through MCP, your architecture must be intentional and decoupled.

Structured API Layer

This means designing clean, consistent, and versioned REST or GraphQL endpoints. An action-first design works best: expose meaningful business actions (such as booking tickets) rather than bare CRUD operations. Define clear input and output contracts to eliminate guesswork, and treat the AI as a user in its own right, subject to authentication, rate limits, and audit trails.
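Here's what "action-first with explicit contracts" might look like as a framework-agnostic handler. Everything named here is hypothetical: the `/v1/bookings` route it would sit behind, the in-memory API key store, and the audit log list. A real service would use its web framework, secret store, and logging pipeline, and would add rate limiting in front of it.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class BookTicketsRequest:   # explicit input contract (hypothetical POST /v1/bookings)
    showtime_id: str
    seats: tuple[str, ...]

@dataclass(frozen=True)
class BookTicketsResponse:  # explicit output contract
    booking_id: str
    seats: tuple[str, ...]

AUDIT_LOG: list[dict] = []
API_KEYS = {"key-agent-123": "movie-agent"}  # hypothetical credential store

def book_tickets(api_key: str, req: BookTicketsRequest) -> BookTicketsResponse:
    """One business action, instead of raw CRUD on seat rows."""
    caller = API_KEYS.get(api_key)
    if caller is None:
        raise PermissionError("unknown caller")  # the agent authenticates like any user
    if not req.seats:
        raise ValueError("at least one seat is required")
    resp = BookTicketsResponse(booking_id=f"BK-{len(AUDIT_LOG) + 1}", seats=req.seats)
    # Every action the agent takes leaves a trace tied to its identity.
    AUDIT_LOG.append({"caller": caller, "action": "book_tickets",
                      "showtime_id": req.showtime_id, "seats": list(req.seats),
                      "at": datetime.now(timezone.utc).isoformat()})
    return resp
```

Compared with exposing `UPDATE seats SET ...`-style CRUD, a single `book_tickets` action gives the agent one well-defined thing to do and gives you one place to validate, authorize, and audit it.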

Decoupled Integration Strategy

To maintain flexibility, all model interactions should be routed through your own backend or API gateway, never allowing frontend teams to call AI vendors directly. By exposing AI services through clean, versioned APIs that abstract complexity, you can wrap LLM features in SDKs for frontend use. All traffic still flows through your APIs, and using event streams or webhooks enables async flows where downstream systems can react to AI outcomes without direct coupling.

This approach lets your SDK or platform layer handle retries, rate limits, and model-specific quirks, freeing frontend teams to build features instead of managing infrastructure complexity.
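Two pieces that typically live in that platform layer are retries with exponential backoff and a client-side rate limiter. The sketch below shows both using only the standard library. `TransientError` is a hypothetical stand-in for whatever retryable errors your provider raises (timeouts, HTTP 429), and the default delays are example values. `sleep` and `clock` are injectable so the behavior can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical stand-in for a retryable provider error (timeout, 429)."""

def with_retries(call: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry transient failures with exponential backoff plus jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads out retries
    raise RuntimeError("unreachable")

class TokenBucket:
    """Allow on average `rate` calls per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill based on elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Frontend code then calls something like `with_retries(lambda: client.summarize(text))` without ever knowing which vendor is behind it or how its limits work.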

A Guide for Embedding AI Features into Your Systems and Apps (in a way that's scalable, decoupled, and safe for teams to adopt quickly):

- Expose AI services via clean, versioned APIs: route all model interactions through your own backend or API gateway.
- Wrap LLM features in SDKs for frontend use: let mobile and web teams use AI without handling prompts, tokens, retries, or model details.
- Use event streams or webhooks for async, loosely coupled flows: let downstream systems react to AI outcomes without direct coupling.
- Handle retries, rate limits, and model-specific quirks inside your SDK or platform layer: frontend teams should not manage infrastructure-level complexity.
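The event-driven point is easiest to see in miniature. This in-process bus is a hypothetical, synchronous stand-in for a real event stream or webhook fan-out; the `ticket.classified` topic and the event fields are made up for illustration.

```python
from collections import defaultdict
from typing import Callable

class EventBus:
    """In-process stand-in for an event stream or webhook fan-out."""

    def __init__(self) -> None:
        self._subs: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subs[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber; return how many received it."""
        handlers = self._subs.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)

bus = EventBus()
crm_updates: list[str] = []
# A downstream system reacts to the AI outcome without the AI service knowing it exists.
bus.subscribe("ticket.classified", lambda e: crm_updates.append(e["category"]))
# The AI service publishes its result once, then moves on.
bus.publish("ticket.classified", {"ticket_id": "T-42", "category": "billing"})
print(crm_updates)
```

A production version would be asynchronous and durable (a message broker or signed webhooks), but the contract is the same: the AI service emits an event, and consumers decide what to do with it.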

Strategic Benefits

Because teams stay decoupled, AI upgrades don't require frontend rework. Models become plug-and-play, and you can swap providers behind a stable interface without disrupting consumers. Security and observability are also centralized since everything runs through your platform.
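"Swap providers behind a stable interface" can be expressed with a structural interface (`typing.Protocol`). In this sketch `ProviderA` and `ProviderB` are hypothetical adapters that echo their input; a real adapter would wrap a vendor SDK. Consumers only ever see `SummaryService`.

```python
from typing import Callable, Protocol

class ChatModel(Protocol):
    def complete(self, prompt: str) -> str: ...

class ProviderA:
    """Hypothetical adapter; a real one would call a vendor SDK here."""
    def complete(self, prompt: str) -> str:
        return f"[A] {prompt}"

class ProviderB:
    """A second hypothetical adapter with the same method shape."""
    def complete(self, prompt: str) -> str:
        return f"[B] {prompt}"

class SummaryService:
    """The stable interface consumers call; the provider is a config choice."""
    def __init__(self, model: ChatModel) -> None:
        self.model = model

    def summarize(self, text: str) -> str:
        return self.model.complete(f"Summarize: {text}")

PROVIDERS: dict[str, Callable[[], ChatModel]] = {"a": ProviderA, "b": ProviderB}

def build_service(provider_name: str) -> SummaryService:
    # Switching vendors is a configuration change, not a frontend change.
    return SummaryService(PROVIDERS[provider_name]())

print(build_service("a").summarize("quarterly report"))
```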

In short, AI should feel like any other backend capability: versioned, monitored, and abstracted, not a direct line to a vendor API.

The Four Pillars of Success

Building intelligent systems requires investments across four pillars.

Building the Next Generation of Intelligent Systems

1. Cloud Infrastructure Modernization for AI Readiness

This begins with upgrading cloud infrastructure to achieve the performance and capabilities AI demands. Models require compute power, storage, and responsive systems that legacy architectures often can't provide.

2. Data Foundation & Governance

A solid data foundation is also critical, which involves securing, organizing, and preparing data for AI use. This includes establishing quality standards, implementing access controls, maintaining compliance, and creating clear data lineage. After all, AI is only as good as the data it works with.
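Quality standards are easiest to enforce when they are written down as checks that run before data reaches a model. The sketch below covers only that part (not access control or lineage) and assumes a hypothetical three-field schema; real pipelines often use a dedicated validation library instead.

```python
from typing import Any

# Hypothetical schema: field name -> expected Python type.
REQUIRED: dict[str, type] = {"customer_id": str, "created_at": str, "amount": float}

def validate(records: list[dict[str, Any]]) -> dict[str, list[int]]:
    """Return the indexes of records that fail each quality rule."""
    issues: dict[str, list[int]] = {"missing_field": [], "wrong_type": [], "duplicate": []}
    seen: set[tuple[str, str]] = set()
    for i, rec in enumerate(records):
        if any(rec.get(f) is None for f in REQUIRED):
            issues["missing_field"].append(i)
            continue
        if any(not isinstance(rec[f], t) for f, t in REQUIRED.items()):
            issues["wrong_type"].append(i)
            continue
        key = (rec["customer_id"], rec["created_at"])  # assumed natural key
        if key in seen:
            issues["duplicate"].append(i)
        seen.add(key)
    return issues

rows = [
    {"customer_id": "c1", "created_at": "2025-10-01", "amount": 12.5},
    {"customer_id": "c2", "created_at": "2025-10-01", "amount": None},
    {"customer_id": "c3", "created_at": "2025-10-02", "amount": "12"},
    {"customer_id": "c1", "created_at": "2025-10-01", "amount": 12.5},
]
print(validate(rows))
```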

3. AI Validation & Model Selection

Careful AI validation and model selection are also necessary. You need to test, evaluate, and choose the right models for your specific context, not just the trendiest options. This means measuring accuracy on representative data, assessing cost-performance tradeoffs, and validating for bias and reliability.
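Once you have measured candidates on your own data, the selection rule itself can be simple and explicit: pick the cheapest model that clears your accuracy and latency bars. The model names, scores, costs, and thresholds below are made-up placeholders, and bias checks would sit alongside this as a separate gate.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class EvalResult:
    model: str
    accuracy: float        # measured on your own representative test set
    cost_per_1k: float     # cost per 1,000 requests, in your currency
    p95_latency_ms: float

def select_model(results: list[EvalResult], min_accuracy: float,
                 max_latency_ms: float) -> Optional[EvalResult]:
    """Return the cheapest model that meets both bars, or None if none does."""
    ok = [r for r in results
          if r.accuracy >= min_accuracy and r.p95_latency_ms <= max_latency_ms]
    return min(ok, key=lambda r: r.cost_per_1k, default=None)

# Placeholder numbers for illustration only.
results = [
    EvalResult("large-model", 0.94, 12.0, 1800),
    EvalResult("mid-model", 0.91, 3.0, 900),
    EvalResult("small-model", 0.82, 0.5, 300),
]
best = select_model(results, min_accuracy=0.90, max_latency_ms=1000)
print(best.model if best else "no model qualifies")
```

Here the most accurate model loses because it is too slow, and the cheapest loses on accuracy: exactly the kind of tradeoff that trend-driven selection misses.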

4. Intelligent Applications & Automation

Finally, the goal is to deliver intelligent applications through the structured APIs and SDKs described earlier, powered by automation and AI working together.

Building for the Long Term

Building AI-native products takes time. Intelligent applications require strategy, structure, and collaboration. Shortcuts only lead to fragile solutions that break under real-world pressure.

Success comes from starting with clear problems rather than searching for AI applications, validating thoroughly before scaling, and building on solid technical foundations. This requires approaching AI as a strategic initiative with cross-functional collaboration between product, engineering, data, and business teams.

This approach isn't about adding AI features to check a box. It's about rethinking how work gets done, how customers are served, and how value is created, using AI to drive transformation.

Over the next few years, we’ll see the shift from adding AI features to building AI-first foundations. The organizations that succeed will treat AI not as a tool, but as an architectural mindset.

Ready to Build?

If you're ready to move from ambitious AI ideas to intelligent apps in production, the process starts with the right strategy and execution. At Seven Peaks Software, we’ve seen how the right structure and governance can turn ambitious AI ideas into lasting enterprise solutions. The journey from concept to production is complex, but with the right foundation, it’s achievable.

Contact us at sales@sevenpeakssoftware.com to start building your intelligent app.

Follow us on Facebook and LinkedIn to stay up to date on our upcoming events.
